In December 2024, a fake video of Elon Musk appeared, claiming that he was giving away $20 million in cryptocurrency. The video made it look as though Musk was promoting the giveaway and urging viewers to send money to participate. It was then shared across various social media accounts, and many people believed it was genuine.

The incident raised questions about how deepfake technology can be used to commit fraud, and about the importance of helping people distinguish the truth from false information. In a related case, in January 2024, the UK-based engineering consultancy Arup fell victim to a sophisticated deepfake fraud that cost the company more than USD 25 million.

Employees of the company were deceived during a video conference in which impersonators of their Chief Financial Officer and other colleagues authorized several transfers to bank accounts in Hong Kong. The incident shows how seriously deepfake technology threatens businesses and why organizations need stronger security measures. Blockchain, the technology behind cryptocurrencies, might be a powerful tool to fight back. Let’s explore how decentralized verification, immutable records, and trustless systems could make false content easier to catch and stop.

Why AI‑Generated Misinformation Is Dangerous

Deepfakes and other types of synthetic media are realistic but fake videos, audio clips, and images created using AI. They can fool people, damage reputations, and even sway votes. According to research, deepfakes now make up 2.6% of online content, up from 0.2% just a year earlier. That growth is alarming, especially because fake news and AI hallucinations can spread like wildfire.

They are easy to create because powerful AI models can now produce convincing speech and visuals. Once a video is posted on social media, it can spread quickly before fact-checkers even see it, and with so much information online, many people can no longer tell what is real and what is fake.

How Blockchain Can Help

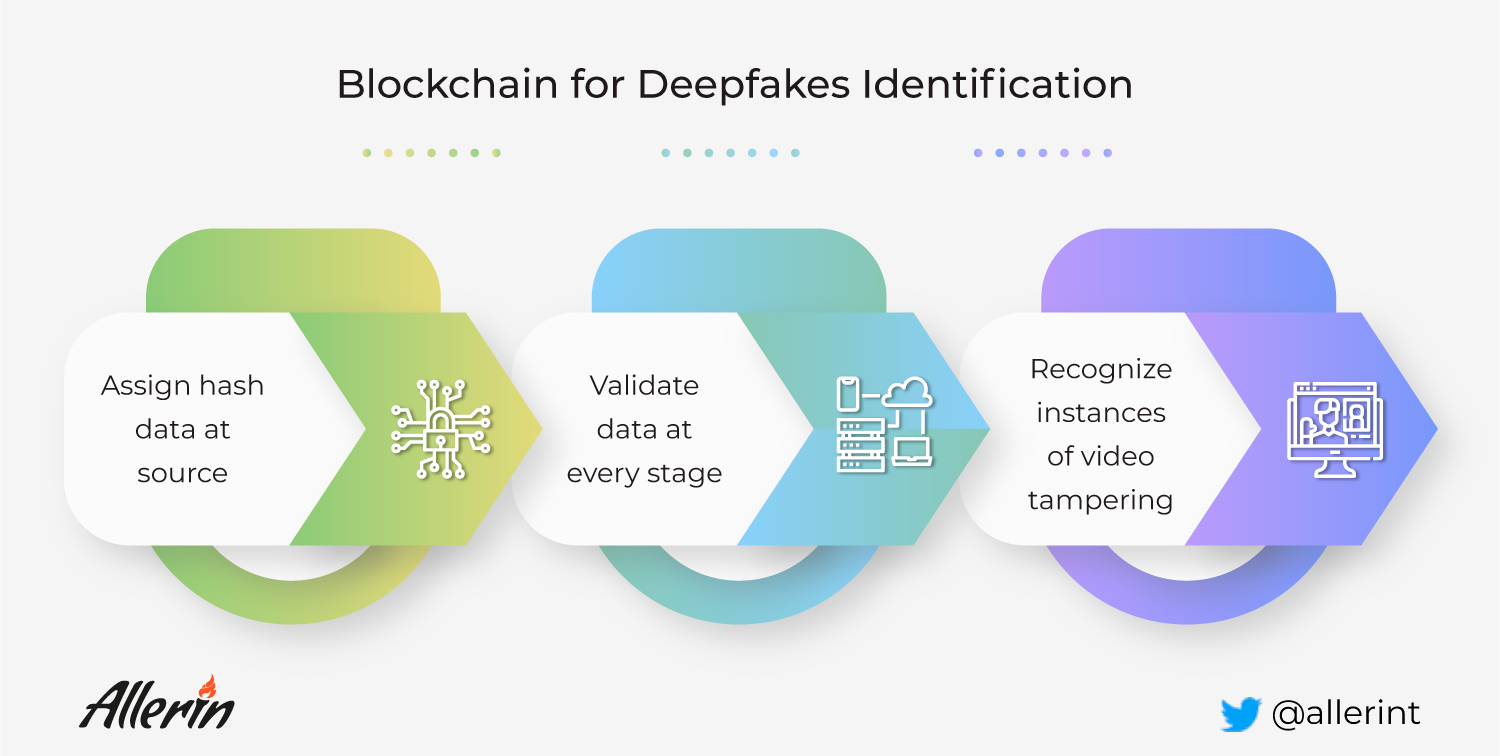

Blockchain technology offers several key features that can help us tackle this problem:

1. Content Provenance and Immutable Records

Blockchain can create a permanent history of a piece of content, known as its content provenance. When a video or image is created, its details, such as who made it and when, can be stored on the blockchain together with a hash of the file. A hash works like a fingerprint: changing even a single byte of the file produces a completely different hash, so anyone can hash a copy of the file and compare the result with the blockchain record to check that it has not been altered.
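A minimal Python sketch of that check, assuming SHA-256 as the hash function and using an in-memory dictionary as a stand-in for the blockchain record. The names `ledger`, `register`, and `matches_record` are hypothetical and do not belong to any particular project's API.

```python
import hashlib

# Hypothetical stand-in for on-chain storage: content ID -> recorded metadata.
ledger = {}


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 hex digest that serves as the file's fingerprint."""
    return hashlib.sha256(data).hexdigest()


def register(content_id: str, data: bytes, creator: str, created_at: str) -> None:
    """Record who made the file, when, and its hash (the step that would go on-chain)."""
    ledger[content_id] = {
        "sha256": fingerprint(data),
        "creator": creator,
        "created_at": created_at,
    }


def matches_record(content_id: str, data: bytes) -> bool:
    """Recompute the hash of a copy and compare it with the stored record."""
    record = ledger.get(content_id)
    return record is not None and record["sha256"] == fingerprint(data)


original = b"frame data of the original clip"
register("clip-001", original, "Example News", "2024-12-01T10:00:00Z")

print(matches_record("clip-001", original))            # True
print(matches_record("clip-001", original + b"\x00"))  # False: one extra byte changes the hash
```

A match only shows that the copy is byte-for-byte identical to what was registered; it does not show that the registered content was truthful in the first place.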

For example, the Amber Authenticate project timestamps hashes of police footage on Ethereum. That way, if someone tries to tamper with the video later, you can detect it by comparing the new hash to the original.
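A single whole-file hash tells you that something changed, but not where. One way to localize tampering is to hash footage in fixed-size segments as it is recorded and anchor each segment hash. The Python sketch below illustrates that idea under stated assumptions: the segment size and function names are hypothetical, and this is not Amber Authenticate's actual implementation.

```python
import hashlib

SEGMENT_SIZE = 4  # bytes per segment; real footage would use seconds of video, not 4 bytes


def segment_hashes(data: bytes, size: int = SEGMENT_SIZE) -> list:
    """Hash the footage in fixed-size segments; these hashes would be anchored on-chain."""
    return [hashlib.sha256(data[i:i + size]).hexdigest() for i in range(0, len(data), size)]


def tampered_segments(recorded: list, data: bytes, size: int = SEGMENT_SIZE) -> list:
    """Return indices of segments that were changed, added, or removed."""
    current = segment_hashes(data, size)
    return [
        i
        for i in range(max(len(recorded), len(current)))
        if i >= len(recorded) or i >= len(current) or recorded[i] != current[i]
    ]


footage = b"AAAABBBBCCCCDDDD"
recorded = segment_hashes(footage)

print(tampered_segments(recorded, footage))              # []
print(tampered_segments(recorded, b"AAAABBXBCCCCDDDD"))  # [1]
print(tampered_segments(recorded, b"AAAABBBB"))          # [2, 3]
```

The trade-off is storage: more segments means more hashes to anchor, in exchange for pinpointing which part of a clip was edited or cut.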

2. Decentralized Verification and Trustless Systems

Blockchain is decentralized: instead of a single person or company controlling it, many independent nodes verify transactions and store copies of the data. This creates trustless systems, in which people do not need to trust a single authority, only the math and code.
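To make "trust the math" concrete, the sketch below links records into a hash chain held by several nodes and accepts the latest state only when a strict majority of nodes hold the same valid chain. It is a deliberately simplified model that assumes an honest majority; real blockchains add consensus protocols such as proof of work or proof of stake, and every function name here is hypothetical.

```python
import copy
import hashlib
import json
from collections import Counter


def block_hash(block: dict) -> str:
    # Canonical JSON so every node computes the same hash for the same block
    return hashlib.sha256(json.dumps(block, sort_keys=True).encode()).hexdigest()


def append(chain: list, record: dict) -> None:
    # Each block commits to the hash of the block before it
    prev = block_hash(chain[-1]) if chain else "0" * 64
    chain.append({"prev": prev, "record": record})


def is_valid(chain: list) -> bool:
    # Editing any earlier block breaks the "prev" link stored in the block after it
    return all(chain[i]["prev"] == block_hash(chain[i - 1]) for i in range(1, len(chain)))


def consensus_head(nodes: list):
    # Accept the latest block hash only if a strict majority of nodes hold the same valid chain
    heads = Counter(block_hash(chain[-1]) for chain in nodes if chain and is_valid(chain))
    if not heads:
        return None
    head, votes = heads.most_common(1)[0]
    return head if votes > len(nodes) // 2 else None


honest = []
for clip in (b"clip-1", b"clip-2", b"clip-3"):
    append(honest, {"sha256": hashlib.sha256(clip).hexdigest()})

nodes = [copy.deepcopy(honest) for _ in range(3)]
nodes[2][0]["record"]["sha256"] = hashlib.sha256(b"deepfake").hexdigest()  # one node tampers

print(is_valid(nodes[2]))                               # False
print(consensus_head(nodes) == block_hash(honest[-1]))  # True
```

A single dishonest node can rewrite its own copy, but it cannot outvote the other copies, which is why no one party needs to be trusted.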

A startup called Witness uses blockchain to make web content verifiable: it logs on-chain proof that a photo or article existed at a certain time. That helps fight misinformation by showing, in effect, “This content existed in this exact form at this exact time.”

3. Decentralized Identity (DID) and Verifiable Credentials

Decentralized identifiers (DIDs) and verifiable credentials can be used to sign media content so that it is easy to confirm who created it. For example, if a news outlet signs a video with a key linked to its DID, viewers can check the signature to make sure the video really comes from that outlet and has not been modified. This makes it harder to pass off a deepfake as the outlet’s work.
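The sketch below shows the sign-then-verify flow. DID methods typically associate keys such as Ed25519 or ECDSA with an identifier; to keep the example dependency-free, it swaps in a Lamport one-time signature built only from SHA-256, which is a genuine public-key signature scheme but may safely sign just one message per key. The `did_registry` dictionary is a hypothetical stand-in for DID resolution, and `did:example` is the placeholder method used in W3C examples.

```python
import hashlib
import secrets


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def _bits(digest: bytes):
    # Yield the 256 bits of a SHA-256 digest, most significant bit first
    for byte in digest:
        for i in range(7, -1, -1):
            yield (byte >> i) & 1


def keygen():
    # Private key: 256 pairs of random secrets. Public key: the hash of each secret.
    sk = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    pk = [(_h(a), _h(b)) for a, b in sk]
    return sk, pk


def sign(sk, message: bytes) -> list:
    # Reveal one secret from each pair, chosen by the matching bit of H(message)
    return [pair[bit] for pair, bit in zip(sk, _bits(_h(message)))]


def verify(pk, message: bytes, signature: list) -> bool:
    if len(signature) != 256:
        return False
    return all(_h(s) == pair[bit] for s, pair, bit in zip(signature, pk, _bits(_h(message))))


# Hypothetical DID registry: resolves a DID to the publisher's public key.
sk, pk = keygen()
did_registry = {"did:example:newsroom": pk}

video = b"original interview footage"
signature = sign(sk, video)

print(verify(did_registry["did:example:newsroom"], video, signature))            # True
print(verify(did_registry["did:example:newsroom"], video + b"edit", signature))  # False
```

A viewer only needs the DID and the published public key; the private key never leaves the newsroom.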

4. Zero‑Knowledge Proofs and Smart Contracts

Zero-knowledge proofs (ZKPs) let you prove that a statement is true without revealing the underlying data. For example, a ZKP could show that a video matches the hash recorded on-chain without sharing the entire file, keeping the content private.
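As a toy illustration, the sketch below uses a Schnorr proof of knowledge, made non-interactive with the Fiat-Shamir heuristic. It assumes the on-chain record is a commitment `y = G**x mod P`, where `x` is derived from the file's hash; the prover then shows it knows `x` without revealing `x` or the file. The parameters are far too small for real security, and this only proves knowledge of the committed value, not that the footage is truthful. Production systems use elliptic-curve groups or zk-SNARK frameworks instead.

```python
import hashlib
import secrets

# Toy parameters: arithmetic modulo the Mersenne prime 2**127 - 1 with base 3.
# Not secure; chosen only to keep the sketch short.
P = 2**127 - 1
G = 3
ORDER = P - 1  # exponents can be reduced mod P - 1 because G**(P - 1) == 1 (mod P)


def challenge(y: int, t: int) -> int:
    # Fiat-Shamir: derive the verifier's challenge by hashing the public values
    data = b"".join(v.to_bytes(16, "big") for v in (G, y, t))
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % ORDER


def secret_from_file(data: bytes) -> int:
    # The secret exponent is derived from the file's SHA-256 hash
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % ORDER


def commit(x: int) -> int:
    # Public commitment that would be stored on-chain
    return pow(G, x, P)


def prove(x: int):
    y = commit(x)
    r = secrets.randbelow(ORDER)  # fresh random nonce hides x in the response
    t = pow(G, r, P)
    s = (r + challenge(y, t) * x) % ORDER
    return t, s


def verify(y: int, t: int, s: int) -> bool:
    # Holds because G**s == G**r * (G**x)**c == t * y**c (mod P)
    return pow(G, s, P) == (t * pow(y, challenge(y, t), P)) % P


video = b"original press-conference recording"
on_chain = commit(secret_from_file(video))
t, s = prove(secret_from_file(video))

print(verify(on_chain, t, s))                # True
print(verify(on_chain, t, (s + 1) % ORDER))  # False: a tampered proof fails
```

The verifier sees only `y`, `t`, and `s`; neither the file nor its hash is disclosed.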

Smart contracts can also help. For instance, a platform could consult a smart contract and refuse to display a video unless it has been verified against on-chain credentials. That is a kind of Web3 fact-checking built into the system.
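The gating logic described above can be sketched in Python, with a small class standing in for a hypothetical on-chain registry contract. The class, its methods, and the approved-publisher rule are illustrative assumptions; a real deployment would write the contract in a language such as Solidity and have the platform query it.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class ProvenanceRegistry:
    """Python stand-in for a hypothetical on-chain registry contract."""

    trusted_publishers: set
    records: dict = field(default_factory=dict)

    def register(self, sender: str, content_hash: str) -> None:
        # Like a contract's require() check, reject writes from unapproved publishers
        if sender not in self.trusted_publishers:
            raise PermissionError(f"{sender} is not an approved publisher")
        # First registration wins; existing records are never overwritten
        self.records.setdefault(content_hash, sender)

    def publisher_of(self, content_hash: str):
        return self.records.get(content_hash)


def render(registry: ProvenanceRegistry, media: bytes) -> str:
    # The platform displays media only if its hash has an on-chain record
    publisher = registry.publisher_of(hashlib.sha256(media).hexdigest())
    return f"verified by {publisher}" if publisher else "blocked: no on-chain record"


registry = ProvenanceRegistry({"did:example:newsroom"})
clip = b"official statement video"
registry.register("did:example:newsroom", hashlib.sha256(clip).hexdigest())

print(render(registry, clip))                   # verified by did:example:newsroom
print(render(registry, b"unregistered upload"))  # blocked: no on-chain record
```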

Real‑World Projects in Action

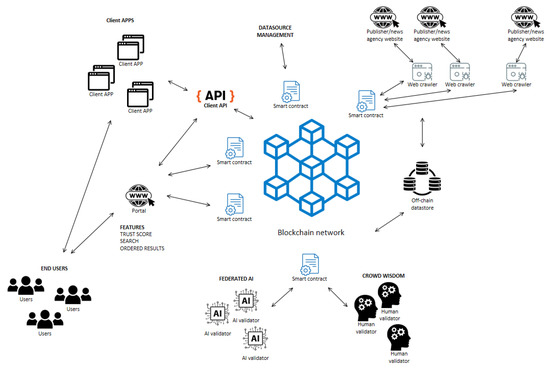

Several real projects are already experimenting with these ideas:

- Struck Capital explained how public key cryptography and blockchain can help detect deepfakes by tracking original source data on-chain.

- Forbes highlighted a system that combines AI (for deepfake detection) with blockchain (for recording authenticity), with applications in areas such as identity verification (KYC).

- CoinDesk reports that blockchain can help verify what is true in AI-generated media by timestamping and authenticating source material.

- Research proposals such as DeHiDe combine a deep learning model with blockchain to detect fake news and show article provenance.

- TRUSTD combines media forensics with blockchain-based signatures to let communities validate or reject shared content.

- A recent academic paper discussed a blockchain-based deepfake detection network that uses smart contracts and proof mechanisms to ensure accuracy and trust.

Challenges to Overcome

Although the combination is powerful, using blockchain and AI to stop misinformation faces several hurdles:

1. Speed and Cost for On‑Chain Data

Blockchains are slow and expensive, so it does not make sense to store every video or image file on-chain. Instead, developers use a hybrid model: they keep the full content off-chain and log only hashes or metadata on-chain. Projects like OpenTimestamps go further by aggregating many hashes into a Merkle tree and recording only its root in a single Bitcoin transaction, which spreads the cost across every timestamped file.
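The batching idea can be sketched with a Merkle tree: only the root goes on-chain, and each file keeps a short proof linking its hash to that root. This is a minimal Python illustration with hypothetical function names, not OpenTimestamps’ proof format or API; duplicating the last node on odd-sized levels is one common convention among several.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_levels(leaves: list) -> list:
    # Build every level bottom-up; on odd-sized levels the last node is paired with itself
    levels = [leaves]
    while len(levels[-1]) > 1:
        level = levels[-1]
        if len(level) % 2:
            level = level + [level[-1]]
        levels.append([h(level[i] + level[i + 1]) for i in range(0, len(level), 2)])
    return levels


def merkle_proof(levels: list, index: int) -> list:
    # Collect (sibling_hash, sibling_is_right) pairs from the leaf up to the root
    proof = []
    for level in levels[:-1]:
        sibling = index ^ 1
        if sibling >= len(level):
            sibling = index  # odd level: the node was paired with itself
        proof.append((level[sibling], sibling > index))
        index //= 2
    return proof


def verify_proof(leaf: bytes, proof: list, root: bytes) -> bool:
    node = leaf
    for sibling, sibling_is_right in proof:
        node = h(node + sibling) if sibling_is_right else h(sibling + node)
    return node == root


files = [b"clip-%d" % i for i in range(5)]
leaves = [h(f) for f in files]
levels = merkle_levels(leaves)
root = levels[-1][0]  # the only value that needs to go on-chain

proof = merkle_proof(levels, 3)
print(verify_proof(leaves[3], proof, root))       # True
print(verify_proof(h(b"deepfake"), proof, root))  # False
```

The proof grows with the logarithm of the batch size, so one on-chain write can cover thousands of files while each proof stays small.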

2. Adoption and Developer Tools

Apps and media producers need easy-to-use tools to create signatures, store them, and verify content. Work in this area is currently fragmented, but tools like Amber Authenticate and Witness make it easier for journalists and developers to build verification into their platforms.

3. AI Evasion and Adversarial Attacks

Deepfakes are AI-generated and keep getting more convincing, and adversarial AI may try to fool blockchain-based verifiers by slightly altering content. Immutable logs help detect tampering with registered content, but fakes can still spread before anyone verifies them.

4. Legal Recognition and Standards

Even if content is verified on-chain, we need legal frameworks to ensure it is accepted by courts and regulators. This requires global cooperation and the adoption of standards for digital signatures, timestamps, and media integrity.

Why It Matters

Blockchain can play an important role in helping people trust what they see and hear online. In democratic societies, false information can be very harmful, especially during elections. For example, a deepfake can make it look as though a candidate said something they never said, which could influence how people vote. If content is verified using blockchain, however, it becomes much easier to tell what is real and what is fake. This helps protect the fairness of elections and keeps the public accurately informed.

For the media and news outlets, blockchain offers a way to prove that videos, articles, and images are original and unedited. Social media platforms can also use blockchain tools to label verified posts and warn users about suspicious or fake content. This kind of content authenticity builds trust between the media and the public.

Public safety is another reason blockchain matters. In emergencies, AI-generated misinformation, such as fake videos of disasters or false alarms, can cause fear and confusion. If news content can be verified quickly using blockchain, communities can stay calm and get the correct information.

Finally, personal reputations are at stake, because some deepfakes target individuals by showing them saying or doing things they never did. This can damage their image and even lead to bullying or legal trouble. Blockchain can help establish the truth by verifying when and how the original content was created, so that people can defend themselves against false claims and digital manipulation.

What’s Next

In the coming years, we might see:

- Integrated Tools: Social media platforms, cameras, and smartphones might include blockchain verification by default.

- Decentralized Identity (DID): Creators may use DIDs to sign their content automatically.

- Hybrid Storage: Full video stored on IPFS or decentralized cloud, with hashes on blockchains like Ethereum or Bitcoin.

- Fact‑Checking Networks: DAOs and automated systems work together to validate data and detect fake media in real time.

- Legal Standards: Governments and media organizations agree on trusted timestamping, verification, and legal weight of digital signatures.

In summary

Combating AI-generated misinformation is a major challenge, but the power of blockchain lies in its ability to create content provenance, immutable records, and decentralized verification systems that anyone can trust. By combining these features with AI tools, DIDs, ZKPs, and smart contracts, we can build a future where it is easy to tell what is real and what is a deepfake.

We may not be able to stop deepfakes completely, but blockchain gives us a fighting chance. The next step is widespread adoption, driven by easy-to-use tools, regulatory support, and community action.

Disclaimer: This article is intended solely for informational purposes and should not be considered trading or investment advice. Nothing herein should be construed as financial, legal, or tax advice. Trading or investing in cryptocurrencies carries a considerable risk of financial loss. Always conduct due diligence.

If you want to read more market analyses like this one, visit DeFi Planet and follow us on Twitter, LinkedIn, Facebook, Instagram, and CoinMarketCap Community.
